Incentives and Business Development in Telecommunications

The telecommunications industry is changing faster than ever. The growing proliferation of competitors forces operators to consider new ways of being relevant to customers and businesses. Many companies have decided to become digital service providers, with the aim of meeting the needs of increasingly demanding consumers.

Telecommunications companies have endured a decade of continual challenges, with the industry subjected to a series of disruptions that push them to innovate to avoid being left behind. The smartphone revolution has led consumers to demand unlimited data and connectivity over other services.

Some studies show that the main challenges facing telecoms operators are growing disruptive competition, agility and investment. From these, the following key messages can be drawn for understanding the future of the sector:

1. Disruptive competition tops the list of sector challenges

Platforms like WhatsApp-Facebook, Google and Amazon have redefined the customer experience by providing instant messaging services, which have drastically reduced demand for services such as SMS.

Additionally, the market trend is to offer multi-service packages and to enable the customer to customise them according to their own needs, leading to mergers, acquisitions and partnerships between companies, in order to offer ever more diverse services.

2. Commitment to digital business models and innovation in the customer experience

The great opportunities offered by digitisation have made it a goal for the vast majority of companies. It is not surprising that the telecommunications sector, too, is attempting to move towards a digital business model.

According to the Vodafone Enterprise Observatory, 53% of companies understand digitisation as the use of new technologies in their business processes and 45% as the use of new technologies to improve customer service.

3. The post-2020 landscape will be transformed by 5G

The new generation of mobile telephony, 5G, which will revolutionise not only the world of communications but also the industry of the future, has just reached Spain. The four domestic operators – Telefónica, Orange, Vodafone and MásMóvil – have already launched the first commercial 5G services, although only in major cities, with reduced coverage and greatly limited technical capabilities. This early start has also been influenced by the COVID-19 pandemic, which has revealed the need for a good-quality connection at all times for smart working, digital education, online shopping and the explosion of streaming.

Spain has Europe’s most powerful fibre network, but there are still regions without coverage. Thanks to its full commitment to FTTH (fibre-to-the-home), Spain has a stable connection that runs directly from the telephone exchange to the home. According to data from the Fibre to the Home Council Europe 2020, Spain has more fibre-connected facilities (10,261) than France, Germany, Italy and the United Kingdom put together.

Operators play a leading role in meeting these digitisation needs.

Measures to be taken into account

Achieving such long-awaited digitisation is not an easy process, and it requires a change in organisational mentality, structure and interaction.

While talent is believed to be a key element of digital transformation, and a lack of digital skills is perceived as a barrier to it, actions say otherwise: only 6% of managers consider the growth and retention of talent to be a strategic priority.

Workers’ perspective on their level of work motivation:

- 40% feel undervalued and unappreciated by their company. This increases the likelihood that employees will look for another job that will give them back their motivation to work.

- 77% of workers acknowledge that they would get more involved in their work if their achievements were recognised within the organisation.

- Over 60% of people state that an incentives or social benefits programme contributes to them not wanting to look for another job. This is something for companies to take into account, because it is estimated that retaining talent can generate increases in company profits of between 25% and 85%.

Companies’ perspective on their employees’ level of work motivation:

- 56% of people managers say they are “concerned” about their employees leaving the company.

- 89% of companies believe that the main reason their workers look for another job is to earn higher wages. However, only 12% of employees who change company earn more in their new jobs, which shows that economic remuneration alone does not motivate the change.

- 86% of companies already have incentives or recognition systems for their employees.

So, beyond the changes and trends set to occur in this sector, telecommunications companies need to intensify their talent retention efforts and make them a priority in order to address all the challenges they face on their journey to digitisation.

Work incentives are a very important measure for retaining and attracting talent. They are compensation that the company gives the employee for achieving certain objectives, and they increase worker engagement, motivation, productivity and professional satisfaction.

As a result, companies in the sector are increasingly choosing to develop a work incentives programme. They first study and plan the most suitable incentives for the company and its types of employees, with the aim of motivating workers to increase their output and improve their results.

In the case of the communications sector, these measures will also increase company sales and profits. Within this sector, sales are made through distributors, agencies, internal sales teams and own stores, and are aimed at both individual customers and companies. That is why such importance is given to the sales force: more highly motivated salespeople, with a greater desire to give their best every day, lead to improved company profits.

Furthermore, all the areas associated with sales will be subject to incentives, including the departments that enable, facilitate and ensure the quality of sales, as well as customer service.

For an incentive system to be effective, it is essential for it to be well-defined, well-communicated, understandable and based on measurable, quantifiable, explicit and achievable objectives.

Work incentives may or may not be economic. Either way, the employee needs to perceive them as a reward for their efforts. Only in that way will the incentives plan be effective.

Finally, once the incentives plan has been established, the company needs to assess it regularly, because in a changing environment such as the present, company objectives, employee motivations and the market will vary. To adapt to changes in the market and to the various internal and external circumstances, it will need to evolve over time.

What advantages do incentive systems offer telecoms companies?

Implementing an incentives plan has numerous benefits for workers, but also for the company, because it:

- Improves employee productivity

- Attracts qualified professionals

- Increases employee motivation

- Assesses results

- Encourages teamwork

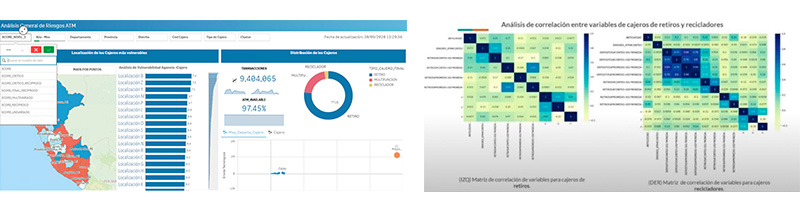

For one of our telecoms clients, /bluetab has developed an internal business tool to calculate incentives for the various areas associated with sales. The work incentives are economic in this case: employees are assessed on whether they meet their objectives, and the incentive is paid as a percentage of their salary. The objectives are chosen to measure each employee's contribution to profitable company growth over a period of time.

The following factors are taken into account in developing the incentives calculation:

- Policy: Definition and approval of the incentives policy for the various sales segments and channels by HR.

- Objectives: Distribution of company objectives across the various areas associated with sales.

- Performance: Performance of the sales force and areas associated with sales over the periods defined previously in the policy.

- Calculation: Calculation of performance and achievement of objectives, of all the profiles included in the incentive policy.

- Payment: Addition of payment to the payroll for the corresponding performance-based incentives. Payments will be bimonthly, quarterly, semi-annual or annual.
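The Calculation step above can be illustrated with a minimal sketch. It assumes a hypothetical policy in which each objective carries a weight, achievement is capped, and nothing is paid below a minimum overall score. The names `Objective`, `achievement` and `incentive_payout`, as well as the `cap` and `threshold` values, are illustrative assumptions and are not taken from the client's actual policy.

```python
from dataclasses import dataclass


@dataclass
class Objective:
    """One objective from a hypothetical incentives policy."""
    name: str
    target: float   # value the employee is expected to reach
    actual: float   # value actually reached in the period
    weight: float   # share of the incentive tied to this objective


def achievement(obj: Objective, cap: float = 1.5) -> float:
    """Ratio of actual to target, capped (the cap is an assumed policy rule)."""
    if obj.target <= 0:
        raise ValueError(f"target for {obj.name!r} must be positive")
    return min(obj.actual / obj.target, cap)


def incentive_payout(salary: float, incentive_pct: float,
                     objectives: list[Objective],
                     threshold: float = 0.8) -> float:
    """Payout as a percentage of salary, scaled by the weighted achievement.

    Pays nothing when the weighted score is below `threshold`
    (an assumed rule, not one stated in the article).
    """
    total_weight = sum(o.weight for o in objectives)
    if abs(total_weight - 1.0) > 1e-9:
        raise ValueError("objective weights must sum to 1")
    score = sum(o.weight * achievement(o) for o in objectives)
    if score < threshold:
        return 0.0
    return round(salary * incentive_pct * score, 2)
```

For example, an employee with a salary of 30,000, a 10% incentive and objectives achieved at 110% (weight 0.6) and 80% (weight 0.4) would score 0.98 under these assumptions and receive roughly 2,940.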

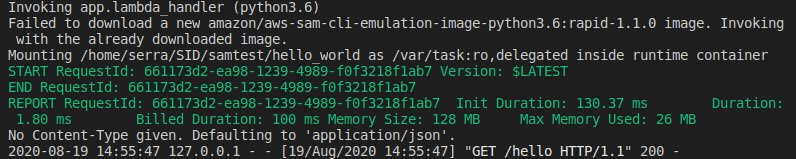

How do we do it?

/bluetab develops tools for tracking the achievement of objectives and calculating incentives. These tools allow everyone in sales to whom the model applies to track their results, and they give the same visibility to the departments involved in decisions about incentives, such as human resources and sales management.

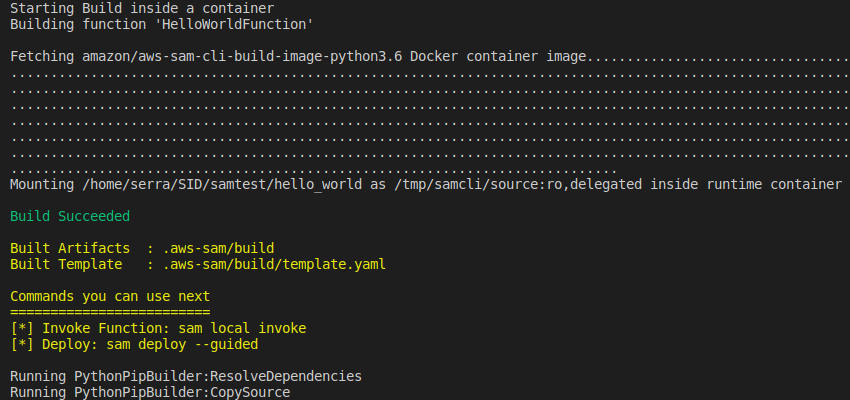

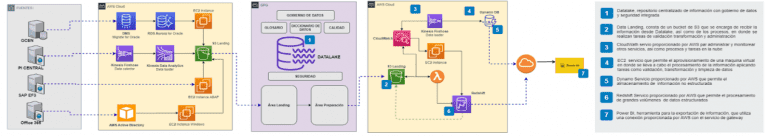

The most important thing in developing these types of tools is to analyse all the client’s needs, gather all the information necessary for calculating the incentives and fully understand the policy. We analyse and compile all the data sources needed for subsequent integration into a single repository.

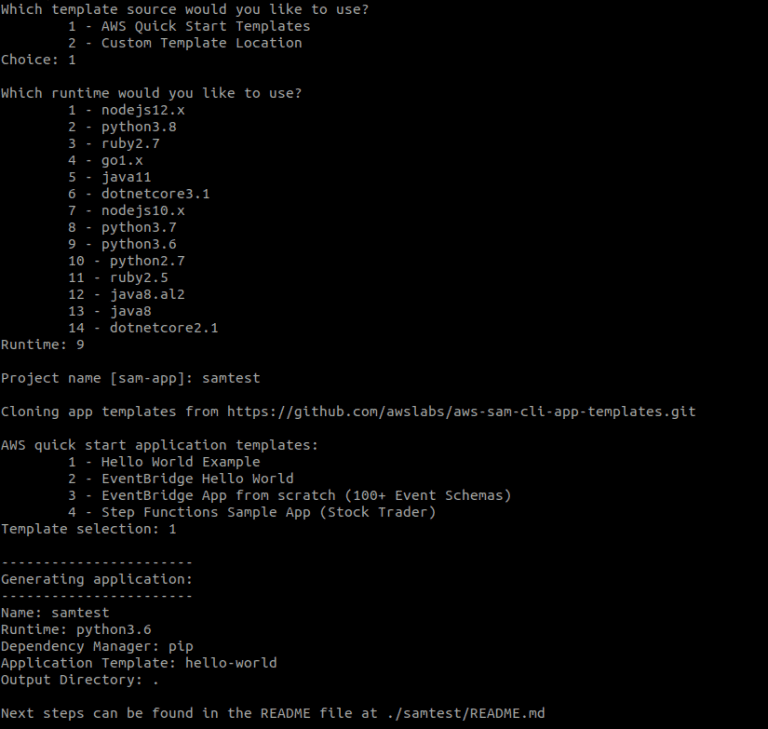

The data sources may be Excel, CSV or TXT files, the customer’s various information systems (such as Salesforce), offer configuration tools or database systems (Teradata, Oracle, etc.). The important thing is to adapt to any environment in which the client works.

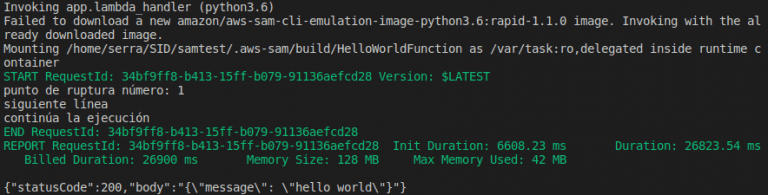

We typically use processes programmed in Python to extract from all the data sources automatically. We then integrate all the resulting files using ETL processes, performing all the necessary transformations and loading the transformed data into a database system as a single repository (e.g. Teradata).
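As an illustration of this extract, transform and load flow, here is a minimal sketch using only the Python standard library. SQLite stands in for the target repository (the article mentions Teradata as an example), and the column names `seller_id`, `period` and `sales`, the table name and the function names are all hypothetical.

```python
import csv
import io
import sqlite3


def extract_rows(csv_text: str) -> list[dict]:
    """Extract: parse CSV content into one dict per row."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def transform(rows: list[dict]) -> list[tuple]:
    """Transform: normalise seller ids, drop rows without one, cast sales."""
    cleaned = []
    for row in rows:
        seller = row["seller_id"].strip().upper()
        if not seller:
            continue  # skip rows that cannot be attributed to a seller
        cleaned.append((seller, row["period"].strip(), float(row["sales"])))
    return cleaned


def load(conn: sqlite3.Connection, records: list[tuple]) -> None:
    """Load: append the cleaned records to a single repository table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales "
        "(seller_id TEXT, period TEXT, sales REAL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", records)
    conn.commit()
```

In a real deployment, the extract step would read from files or source systems rather than an in-memory string, and the load step would use the driver of the chosen database.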

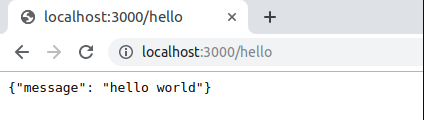

Finally, we connect the database to a data visualisation tool, such as Power BI. All the incentives calculations are implemented in that tool. Scorecards are then published to share this with the various users, providing security both at access and data protection levels.

As an added value, we include forecasts in two different ways. The first is based on data provided by the customer, reported in turn by the sales force. The second integrates predictive analysis algorithms, built with tools such as Python, Anaconda, Spyder and R, which use the historical record of the various KPIs to estimate future data with low margins of error. This makes it possible to predict the results of future incentives.
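The article does not say which predictive algorithms are used. Purely as an illustration of forecasting a KPI from its history, the sketch below fits a straight-line trend by ordinary least squares and extrapolates it. The function names are hypothetical, and a production model would likely need to handle seasonality and other effects.

```python
def fit_linear_trend(values: list[float]) -> tuple[float, float]:
    """Fit y = intercept + slope * t by least squares, with t = 0, 1, 2, ..."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two historical values")
    mean_t = (n - 1) / 2
    mean_y = sum(values) / n
    sxx = sum((t - mean_t) ** 2 for t in range(n))
    sxy = sum((t - mean_t) * (y - mean_y) for t, y in enumerate(values))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_t
    return slope, intercept


def forecast(values: list[float], periods_ahead: int) -> list[float]:
    """Extrapolate the fitted trend for the next `periods_ahead` periods."""
    slope, intercept = fit_linear_trend(values)
    n = len(values)
    return [intercept + slope * (n + k) for k in range(periods_ahead)]
```

Given a perfectly linear history such as 100, 110, 120, 130, the next two forecast values continue the same step of 10 per period.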

Additionally, simulations of the various scenarios can be carried out, using parameters, for calculation of the objectives and achievement of incentives.
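A parameterised simulation of this kind might look like the sketch below. It assumes a simplified, hypothetical payout rule (a percentage of salary scaled by attainment, with an assumed threshold and cap) and applies a set of named uplift scenarios to a forecast sales figure. None of the names or parameter values come from the actual tool.

```python
def payout(salary: float, incentive_pct: float, attainment: float,
           threshold: float = 0.8, cap: float = 1.5) -> float:
    """Hypothetical rule: no payout below threshold, attainment capped."""
    if attainment < threshold:
        return 0.0
    return salary * incentive_pct * min(attainment, cap)


def simulate_scenarios(salary: float, incentive_pct: float,
                       forecast_sales: float, target: float,
                       uplifts: dict[str, float]) -> dict[str, float]:
    """Return the payout under each named scenario.

    `uplifts` maps a scenario name to a relative change applied to the
    forecast sales, e.g. -0.2 for 20% below forecast.
    """
    results = {}
    for name, uplift in uplifts.items():
        attainment = forecast_sales * (1 + uplift) / target
        results[name] = round(payout(salary, incentive_pct, attainment), 2)
    return results
```

Calling `simulate_scenarios(30000, 0.10, 90, 100, {"pessimistic": -0.2, "base": 0.0, "optimistic": 0.2})` would compare three scenarios side by side. Under these assumed rules, the pessimistic case falls below the threshold and pays nothing.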

The tool developed by /bluetab will enable departments affected by incentives to monitor their results daily, weekly, monthly or yearly in a flexible, dynamic and agile manner. As well as allowing the departments involved in the decisions to monitor the data, it will help them improve future decision-making.

Benefits provided by /bluetab

- Centralisation of information: calculation and monitoring are performed using a single tool.

- Higher updating frequency: from monthly or, in some cases, semi-annual updates to daily, weekly and occasionally real-time updates.

- Reduction of 63% in time spent on manual calculation tasks.

- Greater traceability and transparency.

- Scalability and depersonalisation of reporting.

- Improved data quality: errors from manual handling of multiple different sources reduced by 11%.

- Artificial intelligence simulating different scenarios.

- Dynamic visualisation and monitoring of information.

- Improved decision-making at the business level.